Exploring Concurrency Issues with Philosophers and Go

Understand key concepts in concurrency by solving the dining philosophers problem step by step while discussion different subtle problems and intricacies. Full code in Go is provided towards the end.

I have been recently looking to improve and enhance my understanding of concurrency at a deeper level and I stumbled upon a very nice and fun problem while doing so: the "Dining Philosophers" problem which might sound familiar to those who already had a course in concurrent computing. The problem was initially suggested by Edsger Dijkstra (Yes, the same mind behind the infamous Dijkstra’s algorithm for shortest path) in an exam for his students 😅 .

There are several solutions online (e.g. Wikipedia) for this problem. Nevertheless, I decided to write a post about it to walk you step by step into solving the problem in easy steps using Go and discussing different interesting concurrency problems as we face them on the way.

Problem Statement

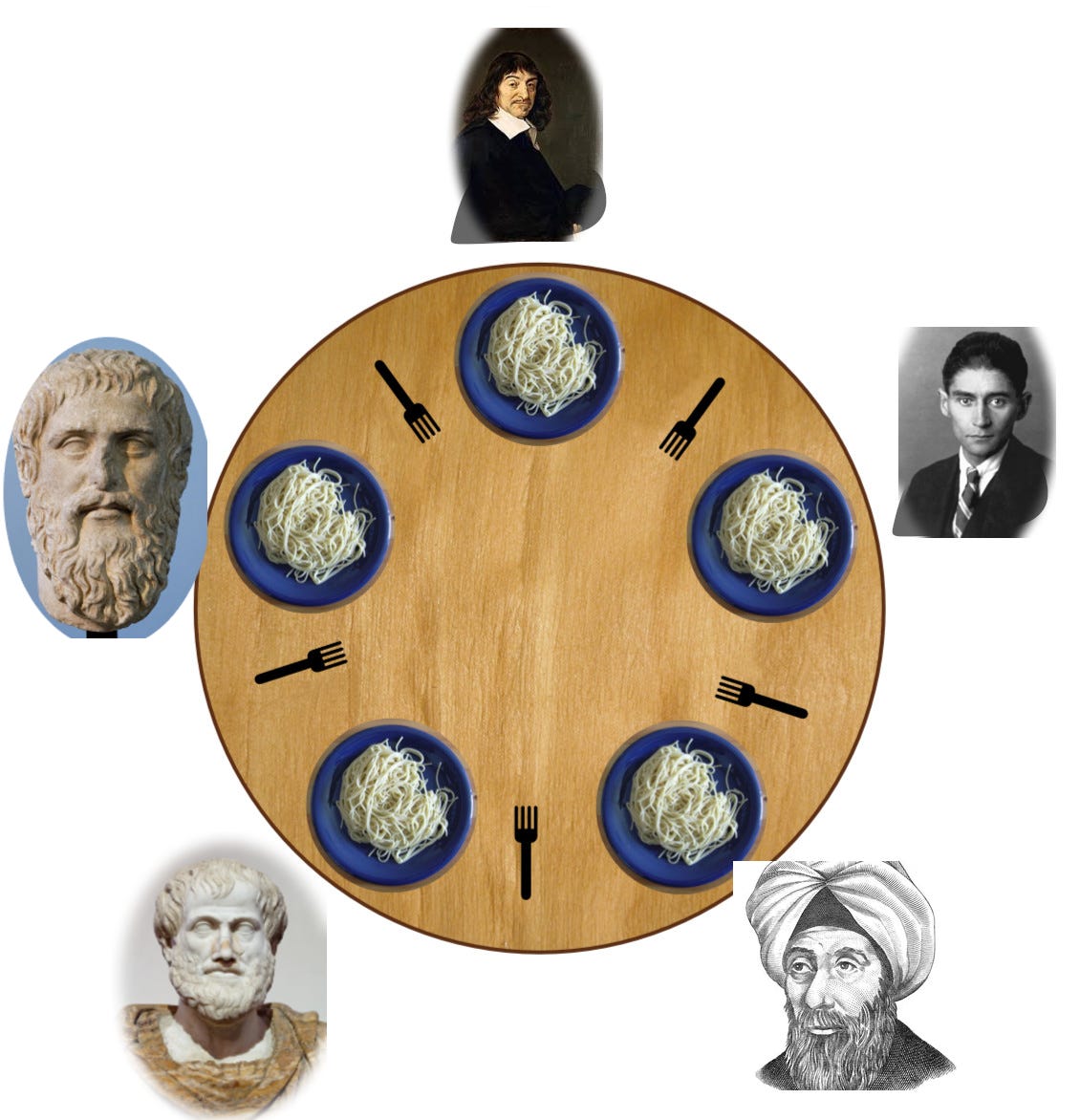

The problem states that we have 5 philosopher sitting at a round table with 5 chopsticks (or forks), each philosopher's life is an infinite loop of thinking and eating. As they are trying to eat noodles, they need to use two chopsticks, one on their left and one on their right (the original version from Dijkstra uses Spaghetti with two forks). In order for a philosopher to eat they need to pickup the two chopsticks on both sides (left & right), when they finish eating they put down both chopsticks. If a philosopher has only one chopstick available, they can't eat and they will have to wait for the second chopstick to be put down (this problem variant is credited to the book "The Art of Multiprocessor Programming").

The goal is to build an algorithm that would allow for all philosophers to eat in a concurrent fashion, meaning that each philosopher could start eating at any time, making the situation nondeterministic.

Mutual Exclusion and Critical Sections

This problem highlights the notion of mutual exclusion in concurrent systems. If you're familiar with mutual exclusion, feel free to skip this section.

Mutual exclusion is a synchronization mechanism that prevents two or more threads from accessing shared resources simultaneously, which could be disastrous and result in corrupted data—a situation known as a data race. To better illustrate this concept, imagine a road intersection, the shared resource would be the intersection, also known as the "Critical Section" in computer science. If we consider each car to be a thread, we want to avoid having multiple cars in the intersection at the same time. Fortunately, we have traffic lights to prevent these dangerous situations. The same principle applies to concurrency, where we have algorithms that help us avoid simultaneous access to the same resource by multiple threads.

Going back to programming, imagine a simple function that runs concurrently across several threads and just increments a shared counter. Running such a function as a thread or a go-routine in a for loop through 100 iterations (with a wait group to avoid exiting early), we might expect a final counter equal to 99 if starting from 0. However, this probably won't be the case because of data races.

You see, increments aren't just one instruction but syntactic sugar for three operations: read the counter, modify it, then write the new value. Two or more threads might read the same value (for example, 42), add one, and write 43 instead of 44. This could happen multiple times in one execution. Note that I highlighted "could" and "might" to suggest the probabilistic nature of this behavior—we might get lucky in one execution and have no data race. That's what makes concurrency and resource sharing inherently difficult: reproducing data race bugs isn't easy because it's up to the scheduler to run those threads/processes/go-routines, making partially blind when it comes to the execution order.

To solve this, different languages provide various mechanisms for synchronizing access to critical sections. In Go, we have the excellent sync package from the standard library that provides tools making concurrency easier to handle and reason about. The main struct we'll use to solve this data race issue is `sync.Mutex`, which locks access to a specific code section so only one thread can execute it at a time, then unlocks it. This is similar to threads taking turns holding tokens before running their code. Let’s look at an example of a proper concurrent increment in Go:

func main(){

var wg sync.WaitGroup

counter := 0

var mux sync.Mutex

// Now properly synchronized to avoid data races

for i := 0; i < 100; i++{

wg.Add(1)

go func(){

defer wg.Done()

mux.Lock()

counter++

mux.Unlock()

}()

}

wg.Wait() // Ensures that we don't leave the main method prematurely

fmt.Printf("Counter: %d", counter) // Now correctly outputs 100

}Before incrementing the shared counter (the critical section of our program), we first lock execution through mux.Lock(), increment the counter, then unlock it through mux.Unlock().

Mutual exclusion is a broad topic in concurrent system design with lots of ideas to explore, but this should provide enough foundation to tackle the dining philosophers problem. That being said, This lock and unlock mechanism is not a magic solution for the mutual exclusion problem — issues like deadlocks and starvation can still arise.

A Simple Algorithm:

Our first approach to solving this problem will be to simulate the philosophers' actions as directly as possible. The core of the algorithm is to model each philosopher as an independent actor who needs to acquire two resources (chopsticks, represented by mutexes) before they can perform their main task (eating).

The logical sequence of actions for any single philosopher looks like this:

Request the left chopstick.

Request the right chopstick.

Eat for a short period.

Release the left chopstick.

Release the right chopstick.

Think for a short period.

This cycle of eating and thinking defines the philosopher's entire life. Although this sequence seems logical, it contains a subtle but crucial flaw. When every philosopher attempts to follow this exact same logic simultaneously, they can collectively create a deadlock.

But let's not get ahead of ourselves, we will talk more about deadlocks in the next section. First, let's translate this algorithm into Go code and see it in action.

We'll start by creating a Philosopher struct to hold their ID and the mutexes for their left and right chopsticks. We will also define Eat() and Think() methods that directly correspond to the steps in our algorithm. The Live() method will then serve as a wrapper to run this cycle a set number of times.

We combine everything in the main function. Here, we'll set up the dining table by creating the philosophers and chopsticks. We use the modulo operator (%) when assigning the right chopstick to create the circular arrangement. Finally, we'll use a sync.WaitGroup to ensure our main program waits for all philosopher goroutines to finish their cycles before exiting.

Here is the complete, runnable code for our initial solution:

package main

import (

"fmt"

"sync"

"time"

)

// Philosopher holds the id and the mutexes for the left and right chopsticks

type Philosopher struct {

id int

leftChopstick *sync.Mutex

rightChopstick *sync.Mutex

}

// Think simulates the thinking activity

func (p *Philosopher) Think() {

fmt.Printf("Philosopher %d is thinking.\n", p.id)

time.Sleep(200 * time.Millisecond)

}

// Eat simulates the eating activity by locking both chopsticks

func (p *Philosopher) Eat() {

p.leftChopstick.Lock()

p.rightChopstick.Lock()

fmt.Printf("Philosopher %d is eating.\n", p.id)

time.Sleep(200 * time.Millisecond)

p.rightChopstick.Unlock()

p.leftChopstick.Unlock()

}

// Live is a wrapper for the Eat and Think activities for k cycles

func (p *Philosopher) Live(k int, wg *sync.WaitGroup) {

defer wg.Done()

for i := 0; i < k; i++ {

p.Eat()

p.Think()

}

}

func main() {

// There are 5 philosophers and 5 chopsticks

numPhilos := 5

// Create the chopsticks (mutexes)

chopSticks := make([]sync.Mutex, numPhilos)

for i := 0; i < numPhilos; i++ {

chopSticks[i] = sync.Mutex{}

}

// Create the philosophers and assign their chopsticks

philos := make([]Philosopher, numPhilos)

for i := 0; i < numPhilos; i++ {

philos[i] = Philosopher{

id: i + 1, // Use 1-based indexing for clarity

leftChopstick: &chopSticks[i],

rightChopstick: &chopSticks[(i+1)%numPhilos],

}

}

numCycles := 3

var wg sync.WaitGroup

// Start a goroutine for each philosopher

for i := 0; i < numPhilos; i++ {

wg.Add(1)

go philos[i].Live(numCycles, &wg)

}

// Wait for all philosophers to finish

wg.Wait()

}Gotcha #1: Deadlocks

If we run this program several times, we will encounter a bug where Go signals a deadlock. A deadlock is a situation in concurrent software where a given thread is waiting/sleeping for another thread to release the lock on a resource, while the second thread is also waiting for a resource to be released by the first thread, which results in a circular wait. Deadlocks are a quite common issue when writing concurrent code and should be taken into account when designing such systems. A formal set of conditions to recognize deadlocks are known as Coffman conditions, which I recommend reading about.

In our case, the deadlock situation happens when every philosopher picks up their left chopstick at the exact same time. All k philosophers/goroutines will keep waiting endlessly for their right chopstick to be unlocked, which is the left chopstick already picked up by the philosopher sitting next to them.

Luckily, there is a very simple solution to solve this issue: we can break the symmetry of the problem by forcing at least one philosopher to start eating by picking up their right fork first. We can do this by forcing the first philosopher to start with their right chopstick (so the rightChopstick mutex is locked first), breaking the symmetry.

Some jargon: A deadlock is when two threads or processes keep waiting on each others without making true progress, a gridlock is the same concept applied to several threads/processes. A livelock is when threads are waiting on each other but they are still progressing on their own

The updated full code that avoid deadlocks is on Github along with a couple of notes on parallel programming and concurrent systems that I took while studying the topic.

Gotcha #2: Starvation

The solution provided on Github works pretty well for most cases; however, it has another slight, very subtle issue...

Starvation is another problem in concurrent systems; however, it is more subtle compared to deadlocks. It happens when a process is perpetually denied necessary resources to proceed. While some threads in the system make progress, others are stuck waiting indefinitely and are therefore "starved."

In our dining scenario, starvation might arise in the following situation: Imagine three philosophers sitting next to each other: A, B, and C. If A starts eating, they lock the chopstick between them and B. B must now wait for A to finish. But as soon as A finishes, C (on the other side of B) starts eating before B gets a chance, locking B's other chopstick. This could happen if, for instance, the scheduler repeatedly favors A and C over a "slower" philosopher B. This cycle could continue indefinitely, resulting in B being perpetually starved.

In practice, starvation can be a rare edge case, but it's a serious fairness issue that should be accounted for. Luckily, some brilliant researchers came up with algorithms that are both deadlock-free and starvation-free.

Two famous examples are Peterson's algorithm and Lamport's Bakery Algorithm.

Peterson's algorithm is an elegant solution for two threads. It uses a shared

turnvariable and a boolean array to ensure that if both threads want to enter a critical section, they politely take turns, guaranteeing one won't have to wait forever.Lamport's Bakery Algorithm, proposed by Leslie Lamport, generalizes this to N threads. It works exactly how people wait in a bakery: a thread gets a ticket number before entering the critical section. The thread with the lowest ticket number gets to proceed first. If two threads get the same number, their unique process ID is used as a tie-breaker. This system ensures fairness and is free from both deadlock and starvation.

Implementing the Bakery algorithm would make this article more complex than it needs to be, so I decided to keep this section mostly theoretical. You can find many implementations online if you are interested.

Gotcha 2.1: Memory Models and Compiler Optimizations

Yes, concurrency is hard. We are not yet done with potential edge cases. I promise this is the last one I will mention, and it is to some extent quite advanced, so feel free to skip it if you wish.

You see, Lamport's Bakery Algorithm is a provably correct solution for mutual exclusion. However, its formal proof relies on an assumption called sequential consistency. This is a strong memory model where all operations appear to execute in some global order, and the operations for each individual thread appear in the order specified by its code. In simple terms, the CPU and compiler are not allowed to reorder memory operations.

Under this strict model, the Bakery algorithm works perfectly, and we can avoid deadlocks and starvation issues. However, for performance reasons, most modern CPUs and compilers do not guarantee sequential consistency by default. They employ weak (or relaxed) memory models, giving them the freedom to reorder instructions where it sees fit. This can break algorithms that rely on a specific ordering of memory reads and writes. Let’s briefly discuss the models used in Go, C++ and Java.

Go's memory model is not sequentially consistent. However, it provides a clear "happens-before" guarantee, this happens-before essentially means that if event B was caused by event A, then A will execute before B. In addition, primitives in the

syncpackage, like mutexes and channels, create explicit synchronization points. When you use them correctly, you are guaranteed that memory operations before a "send" (e.g.,mutex.Unlock()) are visible to operations after a corresponding "receive" (e.g.,mutex.Lock()). This allows you to write correct concurrent code without worrying about the low-level details of memory reordering. If you are interested in diving deeper into Go’s memory model, I suggest reading this excellent article from Laisky’s blog.C++ and Java also have weak memory models. They provide tools like

std::atomic(C++) andvolatileorjava.util.concurrent(Java) that allow programmers to enforce stricter ordering constraints on memory operations when needed, but it is not the default behavior and can lead to some performance issues at high scale.

Concurrency is hard, but…

This problem, although quite classical, shows a great deal of problems that arise when designing concurrent solutions, but luckily programming languages are getting better at synchronization and concurrency, an example of that is Go which is nowadays frequently used for concurrent programs.

I hope you enjoyed reading this piece, it tooks me some time to write but I definitely learned a lot as well and hope that you

did. If you like such deep dives into concurrency, infrastructure and distributed systems, subscribe to the Clusters & Coffee free newsletter!

Full Source Code can be found here on Github

Great article! I enjoyed reading this. Would be cool to see a sequel on this where we visualize the eat/think rate of each philosophers to see if some strange pattern emerges. What if we could model the distance too whereby A is more likely to grab B's chopstick etc...

Anyways I'm getting ahead of myself. Great writeup!

Great post. You may ad Go Playground links for the code examples.